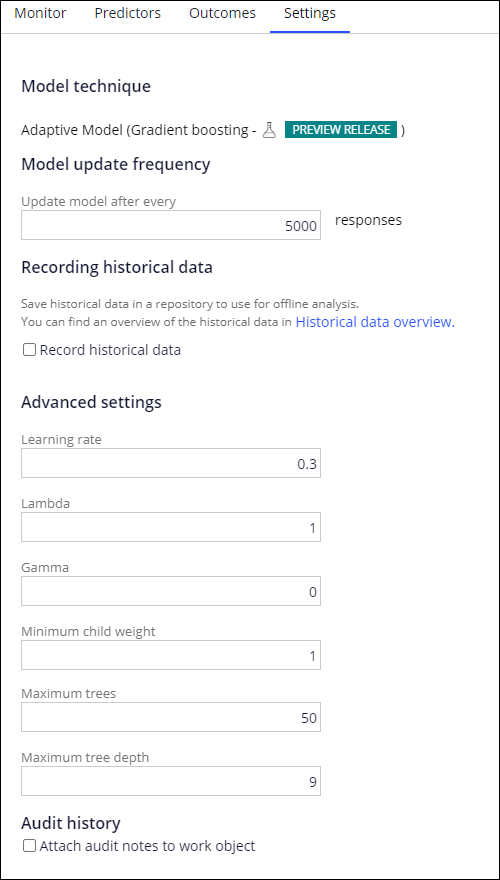

Configuring advanced settings for an adaptive model based on gradient boosting

Configure advanced settings that control the operation and learning of an adaptive boosting model. Properly configured adaptive boosting models are more likely to return relevant results.

- In the Learning rate field, enter a numeric ETA (shrinkage factor) value between 0 and 1 (the value must be greater than 0). This parameter prevents overfitting by slowing down the learning process of the model. A smaller value (closer to 0) gives each tree a small weight in the prediction score of the model, so more trees are needed to achieve good prediction performance. A higher value (closer to 1) gives each tree a large weight, so fewer trees are learned. The recommended and default value is 0.3.

- In the Lambda field, enter a numeric value for regularization of the model on leaf weights. This value adds a penalty when the model complexity increases. Lambda is also used in the calculation of gain, which is the quality metric at the node level. Increasing Lambda makes the model split more conservatively, because the gain is lower than the gain calculated without Lambda. The recommended and default value is 1.

- In the Gamma field, enter a numeric value for the complexity threshold that controls splitting and pruning. Gamma is the minimum gain that a node needs before it is allowed to split. It is also the pruning threshold: a node whose gain falls below Gamma is considered for pruning. A low Gamma value therefore lets the model split more often and prune less often. The recommended and default value is 0.

- In the Minimum child weight field, enter a numeric value to constrain a possible node split. Minimum child weight designates the minimum sum of instance weight (hessian) needed in a child. If the tree partition step results in a leaf node whose sum of instance weight is less than min_child_weight, the building process stops further partitioning. In linear regression, this corresponds to the minimum number of instances needed in each node. The larger the value, the more conservative the algorithm, because more candidate splits are rejected. The recommended and default value is 1.

- In the Maximum trees field, enter the maximum number of trees that can be added to the model, between 1 and 500. For a model that contains shallow trees (a low Maximum tree depth value), set Maximum trees high enough to allow the creation of many trees, so that a new tree is created whenever possible. This also helps the model generalize. Increasing the value increases the scoring time of the model. The recommended and default value is 50.

- In the Maximum tree depth field, enter the maximum depth of a single tree, between 1 and 14. The higher the value, the more complex the tree. A low value results in shallow trees, because trees cannot grow deeper than the configured value. This is desirable in the adaptive boosting model. Set the maximum tree depth in line with the Maximum trees value: shallower trees call for a higher Maximum trees value. Increasing the value increases the scoring time of the model. The recommended and default value is 9.
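To make the interaction between these settings concrete, the following Python sketch shows how the learning rate, Lambda, Gamma, and minimum child weight could enter a split decision. It assumes the standard second-order split-gain formulation used by gradient boosting libraries such as XGBoost; this topic does not confirm that the product computes gain in exactly this way. The `BoostingSettings` class and all function names are hypothetical and exist only for illustration. Treating 1 as an allowed learning rate is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class BoostingSettings:
    """Hypothetical container for the fields above, with the documented defaults."""
    learning_rate: float = 0.3
    lambda_: float = 1.0
    gamma: float = 0.0
    min_child_weight: float = 1.0
    max_trees: int = 50
    max_tree_depth: int = 9

    def validate(self) -> None:
        # Ranges taken from the field descriptions; the inclusive upper
        # bound on the learning rate is an assumption.
        if not 0 < self.learning_rate <= 1:
            raise ValueError("Learning rate must be greater than 0 and at most 1")
        if not 1 <= self.max_trees <= 500:
            raise ValueError("Maximum trees must be between 1 and 500")
        if not 1 <= self.max_tree_depth <= 14:
            raise ValueError("Maximum tree depth must be between 1 and 14")


def node_score(grad_sum: float, hess_sum: float, lam: float) -> float:
    """Quality of a node; Lambda in the denominator shrinks the score."""
    return grad_sum ** 2 / (hess_sum + lam)


def split_gain(g_left, h_left, g_right, h_right, s: BoostingSettings) -> float:
    """Gain of splitting a parent node into a left and a right child."""
    parent = node_score(g_left + g_right, h_left + h_right, s.lambda_)
    children = node_score(g_left, h_left, s.lambda_) + node_score(g_right, h_right, s.lambda_)
    return 0.5 * (children - parent)


def should_split(g_left, h_left, g_right, h_right, s: BoostingSettings) -> bool:
    """Apply the minimum child weight constraint, then the Gamma threshold."""
    if min(h_left, h_right) < s.min_child_weight:
        return False  # a child would have too little hessian weight
    return split_gain(g_left, h_left, g_right, h_right, s) > s.gamma


def leaf_contribution(grad_sum: float, hess_sum: float, s: BoostingSettings) -> float:
    """Leaf weight scaled by the learning rate (shrinkage)."""
    return -s.learning_rate * grad_sum / (hess_sum + s.lambda_)


if __name__ == "__main__":
    settings = BoostingSettings()
    settings.validate()
    # Gradient and hessian sums for two candidate children (made-up numbers).
    print(split_gain(-4.0, 2.0, 3.0, 2.0, settings))
    print(should_split(-4.0, 2.0, 3.0, 2.0, settings))
    print(leaf_contribution(-4.0, 2.0, settings))
```

In this sketch, raising `lambda_` lowers the computed gain, raising `gamma` or `min_child_weight` rejects more candidate splits, and lowering `learning_rate` reduces how much each leaf contributes to the prediction score, which matches the behavior described for each field.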

- Adaptive gradient boosting overview

Self-learning predictive models in Adaptive Decision Manager play a crucial part in a Next-Best-Action strategy. Adaptive models predict the propensities of all the available actions and so provide highly personalized and relevant actions to each individual customer, achieving true 1:1 customer engagement.
