Machine-learning models for text analytics

You can use Pega Platform to analyze unstructured text from different channels, such as emails, social networks, and chats. You can then structure and classify the analyzed data to derive business information that helps you retain and grow your customer base.

To effectively analyze textual data in your application, for example, through a chatbot, build a text analytics model and include it in a text prediction. The most efficient text analytics models are built by using various machine-learning algorithms.

Advantages of machine learning

The basic approach for analyzing textual data is to build a set of rules and patterns to classify text into categories or to detect entities. However, rule-based techniques are ineffective in the long run because they require continuous updating to accommodate changes (such as new business scenarios or use cases) or to handle exceptions. The volume of data, language complexity, different types of communication, slang, emoticons, and other factors make the task of maintaining rules or patterns very time-consuming and ineffective.

With machine learning, you provide the training data and the model learns to classify textual data according to the selected algorithm. Additionally, you can accommodate changes or improve the classification accuracy by adding feedback to the training data or by using a different algorithm, which is easier than maintaining an ever-increasing number of complex rules or patterns.

Model types

Depending on the type of text analysis that you want to perform in your application, you can choose a categorization model or an extraction model.

- Categorization models

- Build a categorization model to classify a piece of text into a single group or multiple groups. For example, the sentence "I just can't wait for FIFA World Cup 2018!" is likely to be classified as belonging to the user-defined sports category rather than politics, health, or economics. Each outcome with which a piece of text is associated as a result of categorization is called a category.

- For more information, see Best practices for creating categorization models.

- Extraction models

- Build an extraction model to identify a word or a phrase as structured content and associate metadata with that content. For example, in the sentence "Justin Trudeau is the prime minister of Canada", you can tag the phrase "Justin Trudeau" as a person name. Each piece of text that is tagged with specific metadata is called an entity.

- For more information, see Best practices for creating entity extraction models.
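
To make the difference between the two outcomes concrete, the following Python sketch models a category and an entity as plain data structures. The class names, field names, and character offsets are hypothetical and only illustrate the shape of each result; they are not Pega Platform APIs.

```python
from dataclasses import dataclass


@dataclass
class Category:
    """Outcome of a categorization model: a label for the whole text."""
    text: str
    label: str


@dataclass
class Entity:
    """Outcome of an extraction model: a tagged span inside the text."""
    text: str
    start: int        # index of the first character of the span
    end: int          # index one past the last character of the span
    entity_type: str  # metadata associated with the span

    @property
    def value(self) -> str:
        """Return the tagged word or phrase."""
        return self.text[self.start:self.end]


# Categorization: the whole sentence receives one label.
sports = Category("I just can't wait for FIFA World Cup 2018!", "sports")

# Extraction: only a phrase inside the sentence is tagged.
sentence = "Justin Trudeau is the prime minister of Canada"
name = "Justin Trudeau"
start = sentence.find(name)
person = Entity(sentence, start, start + len(name), "person name")
```

A categorization result describes the text as a whole, while an extraction result points at a specific span, which is why the two model types need differently labeled training data.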

Algorithm tradeoff decisions

Depending on the natural language processing problem that you want to solve and the availability of training data, you can select an algorithm that best suits your needs.

Examples of algorithms and their properties

| Algorithm | Details | When to choose this algorithm |
| --- | --- | --- |
| Maximum Entropy (MaxEnt) | MaxEnt is a probabilistic classifier that belongs to the class of exponential models and does not assume that the features are conditionally independent of each other. It is based on the principle of maximum entropy: from all the models that fit your training data, the algorithm selects the one that has the largest entropy (uncertainty). MaxEnt can solve a wide variety of text classification problems, such as language detection, topic classification, and sentiment analysis. | MaxEnt generates high-accuracy models; however, it is slower than Naive Bayes. MaxEnt is the preferred algorithm for text analysis because it makes minimal assumptions about the data, which suits close to real-world scenarios. |
| Naive Bayes | Naive Bayes is a simple but effective algorithm for predictive modeling, with strong independence assumptions between the features. It is the fastest modeling technique. | Use it for faster performance at the cost of a lower accuracy score. |
| Support Vector Machine (SVM) | SVM is a discriminative classifier. It handles nonlinear data by using kernel functions, which implicitly map inputs to high-dimensional feature spaces. SVM models are the slowest to build. | This algorithm provides higher-accuracy models and works well on clean data sets, but it is slow. |
| Conditional Random Field (CRF) | CRF is a class of statistical modeling methods that is often applied in pattern recognition and used for structured prediction. It is a family of sequence modeling methods. Whereas a discrete classifier predicts a label for a single sample without considering neighboring samples, CRF can take context into account. | CRF is the only algorithm available for entity extraction. It produces fewer false positives than hidden Markov models (HMMs). |
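
The independence assumption that the preceding table attributes to Naive Bayes can be sketched in a few lines of Python. This is an illustrative toy classifier, not the implementation that Pega Platform uses; the function names, the whitespace tokenization, the add-one smoothing, and the training sentences are all assumptions made for the example.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(samples):
    """Count labels and per-label words from (text, label) pairs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in samples:
        label_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return label_counts, word_counts, vocabulary


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    label_counts, word_counts, vocabulary = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        # Prior: how often the label occurs in the training data.
        score = math.log(count / total)
        words_in_label = sum(word_counts[label].values())
        for word in text.lower().split():
            # Independence assumption: each word contributes separately.
            # Add-one smoothing (an assumption of this sketch) avoids a
            # zero probability for words not seen with this label.
            numerator = word_counts[label][word] + 1
            denominator = words_in_label + len(vocabulary)
            score += math.log(numerator / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("the match ended with a late goal", "sports"),
    ("the team won the world cup", "sports"),
    ("the parliament passed a new law", "politics"),
    ("the minister announced the election", "politics"),
]
model = train_naive_bayes(training)
```

With this toy training set, `classify("the world cup goal", model)` returns `sports` and `classify("new election law", model)` returns `politics`. Training is a single counting pass over the data, which is consistent with Naive Bayes being the fastest technique to build, while treating every word as independent is the simplification that can cost accuracy.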

Life cycle of a model

Each machine-learning model goes through the following stages throughout its life cycle:

- Determining the type of the model that is the most applicable to your use case.

  If you want to assign a value to a piece of text, select a categorization model. If you want to extract specific phrases or words from a piece of text, choose an extraction model.

- Choosing a data set to train and test the model.

  Such a data set consists of pairs of content and result. For example, in sentiment analysis, an example content-result pair consists of a phrase and the associated sentiment ("the best salted caramel ice cream I have ever eaten", positive).

- Choosing an algorithm.

  Pega provides a set of algorithms that you can use to train your classifier for extraction and categorization analysis. Depending on the algorithm that you use, the build times might vary. For example, Naive Bayes performs the fastest analysis of training data sets. However, other algorithms provide more accurate predictions. For more information, see Training data size considerations for building text analytics models.

- Splitting the data set into training and test samples.

  To verify the prediction accuracy of your model, designate a portion of your data set as a test sample. The test sample is ignored when training the model, but it is used to verify whether the outcome that was predicted by the model matches the outcome that you predicted. The accuracy score is the percent of test samples that the model classified correctly.

- Building the model.

- Comparing and evaluating the outcomes.

  Inspect your test sample for any mismatches in prediction outcomes. This inspection helps you to modify your training data (for example, by updating it with additional training samples) so that the next time you build your model, the prediction accuracy increases.
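
The splitting and evaluation stages in the preceding list can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the logic of the Pega wizard: the function names, the 40% test fraction, the fixed random seed, and the stand-in model that always answers `positive` are all hypothetical.

```python
import random


def split_data_set(records, test_fraction=0.2, seed=42):
    """Shuffle (content, result) pairs and split them into training and test samples."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    test_size = max(1, int(len(shuffled) * test_fraction))
    return shuffled[test_size:], shuffled[:test_size]


def accuracy_score(model, test_sample):
    """Return the percent of test records that the model classified correctly."""
    correct = sum(1 for content, result in test_sample if model(content) == result)
    return 100.0 * correct / len(test_sample)


def find_mismatches(model, test_sample):
    """List (content, expected, predicted) for every wrong prediction."""
    return [
        (content, result, model(content))
        for content, result in test_sample
        if model(content) != result
    ]


records = [
    ("the best salted caramel ice cream I have ever eaten", "positive"),
    ("great service and friendly staff", "positive"),
    ("I love this place", "positive"),
    ("the worst coffee in town", "negative"),
    ("terrible and slow delivery", "negative"),
]

# The test sample is held out and never used for training.
training_sample, test_sample = split_data_set(records, test_fraction=0.4)


def always_positive(content):
    """Stand-in for a trained model; a real model would be built from training_sample."""
    return "positive"


score = accuracy_score(always_positive, test_sample)
mismatches = find_mismatches(always_positive, test_sample)
```

The list returned by `find_mismatches` corresponds to the final stage: each entry is a test record whose predicted outcome differs from the expected one, which indicates where additional training samples might help.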

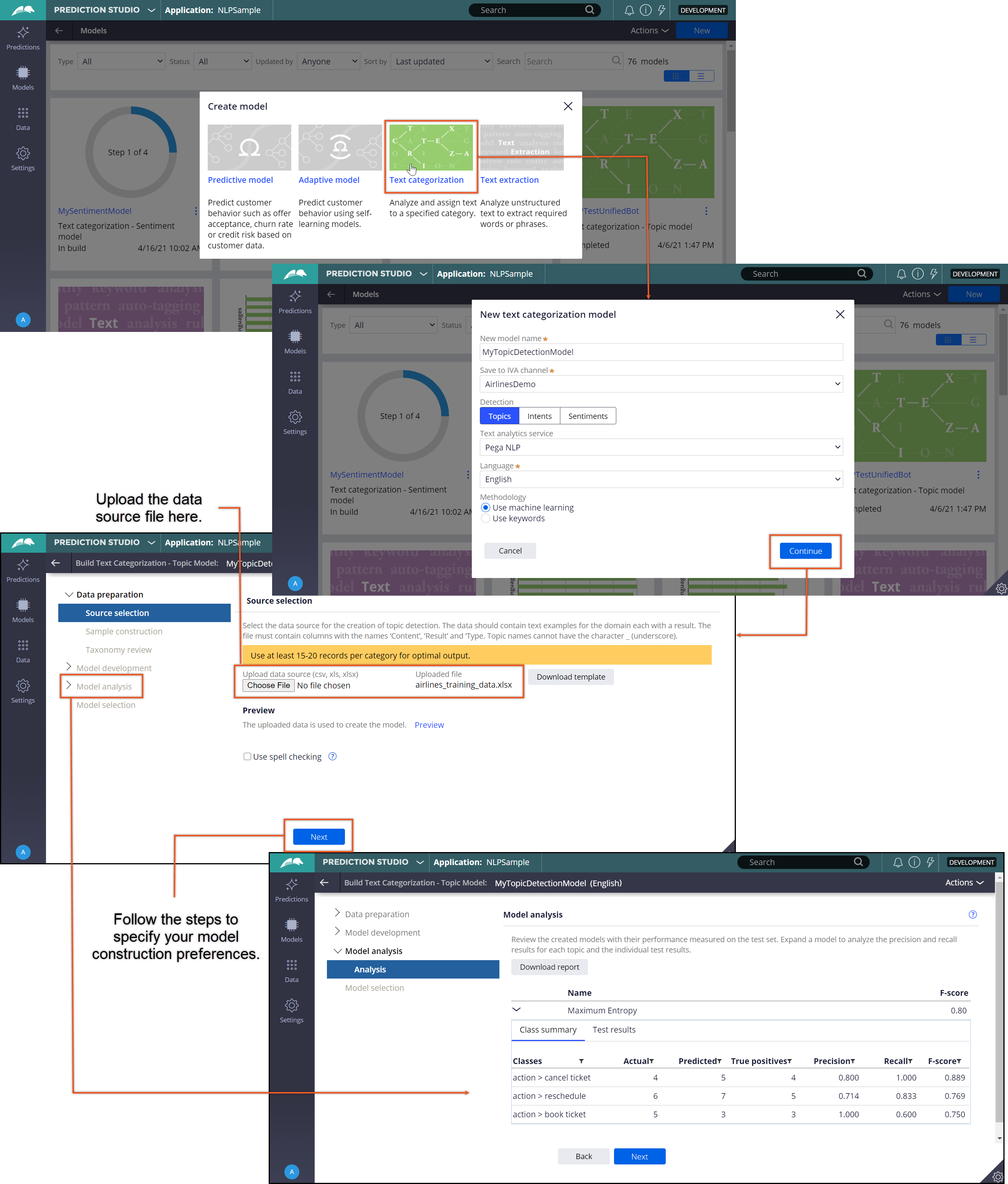

Pega provides a wizard that guides you through the process of building machine-learning models for text analytics, as shown in the following example. The wizard provides a streamlined path for developing models, in which you upload the training and test data set, select an algorithm that best suits your use case, build a model, and review its accuracy. For more information about how to build machine-learning models in Pega Platform, see Labeling text with categories and Extracting keywords and phrases.

Model accuracy calculation

After you build a model, you can determine its efficiency by investigating precision, recall, and F-score measures that are derived by comparing the labels that you predicted (the manual outcome) and the labels that the model predicted (the machine outcome) for each test record. These accuracy measures are calculated according to the following formulas:

- Precision

- The number of true positives divided by the count of machine outcomes (labels that the model predicted).

- Recall

- The number of true positives divided by the count of manual outcomes (labels that you predicted).

- F-score

- The harmonic mean of precision and recall.

The following example shows how precision, recall, and F-score are calculated:

Test example with calculated Precision, Recall and F-score

| Manual outcome count | Machine outcome count | True positives | Precision | Recall | F-score |
| --- | --- | --- | --- | --- | --- |
| 10 | 12 | 10 | 0.833333333 | 1 | 0.909091 |
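
The figures in the preceding example can be reproduced with a short Python sketch. The function names are hypothetical and chosen for the example; the arithmetic follows the definitions of precision, recall, and F-score given in this section.

```python
def precision(true_positives, machine_outcomes):
    """True positives divided by the count of labels that the model predicted."""
    return true_positives / machine_outcomes


def recall(true_positives, manual_outcomes):
    """True positives divided by the count of labels that you predicted."""
    return true_positives / manual_outcomes


def f_score(p, r):
    """Harmonic mean of precision and recall."""
    if p + r == 0:
        return 0.0
    return 2 * p * r / (p + r)


# Values from the example: 10 manual outcomes, 12 machine outcomes, 10 true positives.
p = precision(10, 12)
r = recall(10, 10)
f = f_score(p, r)
print(round(p, 3), round(r, 3), round(f, 3))  # prints: 0.833 1.0 0.909
```

In this example the model found every manual label (recall of 1) but also predicted two labels that were not in the manual outcome, which lowers precision and therefore the F-score.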

For more information, see Text analytics accuracy measures.
